Regression and correlation analysis are statistical research methods. These are the most common ways to show the dependence of a parameter on one or more independent variables.

Below we use practical examples to walk through these two analyses, both very popular among economists, and then show how to combine them.

Regression Analysis in Excel

Regression analysis shows how one or more independent variables influence a dependent variable. For example, how does the size of the economically active population depend on the number of enterprises, wages and other parameters? Or: how do foreign investment, energy prices and similar factors affect GDP?

The result of the analysis helps you set priorities: once the main factors are identified, you can forecast, plan the development of priority areas, and make management decisions.

Regression can be:

- linear (y = a + bx);

- parabolic (y = a + bx + cx^2);

- exponential (y = a * exp(bx));

- power (y = a * x^b);

- hyperbolic (y = b/x + a);

- logarithmic (y = b * ln(x) + a);

- exponential with base b (y = a * b^x).

Let's look at an example of building a regression model in Excel and interpreting the results. Let's take the linear type of regression.

Task. At 6 enterprises, the average monthly salary and the number of quitting employees were analyzed. It is necessary to determine the dependence of the number of quitting employees on the average salary.

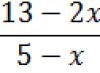

The linear regression model looks like this:

$Y = a_0 + a_1 x_1 + \dots + a_k x_k$,

where $a_0, \dots, a_k$ are the regression coefficients, $x_1, \dots, x_k$ are the influencing variables, and k is the number of factors.

In our example, Y is the indicator of quitting employees. The influencing factor is wages (x).

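Excel has built-in functions for calculating the parameters of a linear regression model, but the Analysis ToolPak add-in (“Analysis Package” in some localized versions) does this faster.

Let's activate this add-in: in current desktop versions of Excel, go to File > Options > Add-ins, choose Excel Add-ins in the Manage box, click Go, and tick Analysis ToolPak.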

Once activated, the add-on will be available in the Data tab.

Now let's do the regression analysis itself.

In the output, look first at R-squared and the coefficients.

R-squared is the coefficient of determination. In our example it is 0.755, or 75.5%. This means the model explains 75.5% of the variation in Y. The higher the coefficient of determination, the better the model fits: above 0.8 is good, below 0.5 is poor (such an analysis can hardly be considered reasonable). Our value is “not bad”.

The coefficient 64.1428 (the intercept) shows what Y would be if all variables in the model were equal to 0. In other words, the analyzed parameter is also influenced by factors not described in the model.

The coefficient -0.16285 shows the weight of variable X on Y: within this model, each additional unit of average monthly salary is associated with 0.16285 fewer quitting employees. Whether that is a large or small effect depends on the units in which salary is measured. The “-” sign indicates a negative relationship: the higher the salary, the fewer people quit, which is what one would expect.
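The same three numbers (intercept, slope, R-squared) can be reproduced outside Excel. The following is a minimal Python sketch, not the article's calculation: the original table is only available as an image, so the `salary` and `quits` values below are made up for illustration, and the function name `fit_line` is hypothetical.

```python
# Hypothetical data: the article's table is an image, so these numbers are invented.
salary = [30, 35, 40, 45, 50, 55]   # average monthly salary (arbitrary units)
quits = [60, 58, 57, 57, 54, 56]    # number of employees who quit


def fit_line(x, y):
    """Return (intercept, slope, r_squared) of the least-squares line y = a0 + a1*x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # R-squared = 1 - (residual sum of squares) / (total sum of squares)
    ss_res = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return intercept, slope, 1 - ss_res / ss_tot


a0, a1, r2 = fit_line(salary, quits)
print(f"Y = {a0:.3f} + ({a1:.3f}) * x, R^2 = {r2:.3f}")
```

With real data, the printed intercept and slope should correspond to the two values in Excel's "Coefficients" column.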

Correlation Analysis in Excel

Correlation analysis helps determine whether there is a relationship between indicators in one or two samples. For example, between the operating time of a machine and the cost of repairs, the price of equipment and the duration of operation, the height and weight of children, etc.

If there is a relationship, the next question is whether an increase in one parameter goes with an increase (positive correlation) or a decrease (negative correlation) in the other. Correlation analysis helps the analyst determine whether the value of one indicator can be used to predict the possible value of another.

The correlation coefficient is denoted by r and ranges from -1 to +1. How its values are classified as weak or strong differs between fields. When the coefficient is 0, there is no linear relationship between the samples.

Let's look at how to find the correlation coefficient using Excel.

To find paired coefficients, the CORREL function is used.

Objective: Determine whether there is a relationship between the operating time of a lathe and the cost of its maintenance.

- Place the cursor in any cell and press the fx button.

- In the “Statistical” category, select the CORREL function.

- Argument “Array 1” is the first range of values, machine operating time: A2:A14.

- Argument “Array 2” is the second range of values, repair cost: B2:B14. Click OK.

To determine the strength of the relationship, look at the absolute value of the coefficient (each field of activity has its own scale).
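CORREL returns the Pearson correlation coefficient, which is simple enough to compute by hand. The sketch below shows the calculation in Python; the function name `pearson_r` and the `hours`/`cost` values are assumptions for illustration, not the article's lathe data.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length samples."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical data: machine operating time and repair cost
hours = [100, 200, 300, 400, 500]
cost = [12, 19, 33, 38, 52]

r = pearson_r(hours, cost)
print(f"r = {r:.4f}")
```

A value of r close to +1, as here, would indicate a strong direct relationship between operating time and repair cost.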

For correlation analysis of more than two parameters, it is more convenient to use “Data Analysis” (the Analysis ToolPak add-in). Select Correlation from the list and specify the input range. That is all.

The resulting coefficients are displayed as a correlation matrix.
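A correlation matrix of this kind can also be sketched in Python. The column names and values below are hypothetical, and `correlation_matrix` is an illustrative helper rather than anything Excel exposes; each cell is simply the pairwise Pearson coefficient.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length samples."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def correlation_matrix(columns):
    """Map every pair of column names to its correlation coefficient."""
    names = list(columns)
    return {a: {b: pearson_r(columns[a], columns[b]) for b in names} for a in names}


# Hypothetical data with three parameters
data = {
    "hours": [100, 200, 300, 400, 500],
    "cost": [12, 19, 33, 38, 52],
    "age": [1, 3, 2, 5, 4],
}

matrix = correlation_matrix(data)
for name, row in matrix.items():
    print(name, [round(value, 3) for value in row.values()])
```

The diagonal is always 1 (each parameter correlates perfectly with itself), and the matrix is symmetric, which is why Excel fills in only the lower triangle.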

Correlation and regression analysis

In practice, these two techniques are often used together.

Example:

Now the regression analysis data has become visible.

During their studies, students encounter a variety of equations. One of them, the regression equation, is discussed in this article. It is used to describe the relationship between variables and is common in statistics and econometrics.

Definition of regression

In mathematics, regression describes how the average value of one quantity depends on the values of another. The regression equation gives the average value of one characteristic as a function of another. In general form it is written y = f(x), where y is the dependent variable and x is the independent variable (the factor).

What are the types of relationships between variables?

In general, there are two contrasting types of relationships: correlation and regression.

In the first, the variables are treated as equals: it is not reliably known which variable depends on the other.

If the variables are not equal in this sense, and the problem states which variable is explanatory and which is dependent, then we have a relationship of the second type. Before constructing a linear regression equation, you need to find out which type of relationship is observed.

Types of regressions

Seven types of regression are distinguished here: hyperbolic, linear, multiple, nonlinear, pairwise, inverse, and log-linear.

Hyperbolic, linear and logarithmic

The linear regression equation is the form whose parameters are easiest to interpret. It looks like y = c + m*x + E. A hyperbolic equation has the form of a regular hyperbola: y = c + m/x + E. A log-linear equation expresses the relationship through logarithms: ln y = ln c + m * ln x + ln E.

Multiple and nonlinear

The two more complex types of regression are multiple and nonlinear. The multiple regression equation is expressed by the function $y = f(x_1, x_2, \dots, x_c) + E$. Here y is the dependent variable and the x are explanatory variables. The variable E is stochastic; it captures the influence of factors not included in the equation. Nonlinear regression is somewhat ambiguous: a model can be nonlinear in the explanatory variables yet linear in the parameters being estimated.

Inverse and paired types of regressions

An inverse regression is a function that has to be transformed to linear form before estimation. In most standard application programs it has the form y = 1/(c + m*x + E). A pairwise regression equation shows the relationship between the data as y = f(x) + E. As in the other equations, y depends on x, and E is a stochastic term.
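Several of these forms are estimated by first transforming them to a straight line. As an illustration, the hyperbolic equation y = c + m/x becomes linear in the substituted variable z = 1/x. The sketch below assumes noise-free, made-up data and uses hypothetical helper names (`fit_line`, `fit_hyperbola`).

```python
def fit_line(x, y):
    """Least-squares intercept and slope of y = c + m*x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    m = sxy / sxx
    return mean_y - m * mean_x, m


def fit_hyperbola(x, y):
    """Fit y = c + m/x by regressing y on z = 1/x."""
    z = [1 / xi for xi in x]
    return fit_line(z, y)


# Hypothetical data generated exactly from y = 3 + 12/x
x = [1, 2, 3, 4, 6]
y = [3 + 12 / xi for xi in x]

c, m = fit_hyperbola(x, y)
print(f"y = {c:.3f} + {m:.3f}/x")
```

The same substitution idea applies to the log-linear form, where both sides are replaced by their logarithms.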

Concept of correlation

Correlation is an indicator of a relationship between two phenomena or processes. The strength of the relationship is expressed by the correlation coefficient, whose value lies in the interval [-1; +1]. A negative value indicates an inverse relationship, a positive value a direct one. If the coefficient equals 0, there is no linear relationship. The closer the absolute value is to 1, the stronger the relationship between the parameters; the closer to 0, the weaker it is.

Methods

Parametric correlation methods assess the strength of the relationship. They rely on estimates of the distribution and are used to study parameters that follow a normal distribution.

Estimating a linear regression equation requires identifying the type of dependence, choosing the form of the regression function, and estimating the parameters of the chosen formula. The correlation field (scatter plot) is used to identify the relationship. All available data are plotted in a rectangular two-dimensional coordinate system: the values of the explanatory factor along the abscissa axis and the values of the dependent factor along the ordinate axis. If there is a functional relationship between the parameters, the points line up along a line.

If the correlation coefficient of such data is less than 30% (0.3 in absolute value), we can speak of an almost complete absence of a relationship. If it is between 30% and 70%, the relationship is of medium strength. A value of 100% is evidence of a functional relationship.

A nonlinear regression equation, just like a linear one, must be supplemented with a correlation index (R).

Correlation for Multiple Regression

The coefficient of determination is the square of the multiple correlation coefficient. It shows how closely the set of factors is related to the characteristic being studied, and it gives an indication of how strongly the parameters jointly influence the result. The quality of the multiple regression equation is assessed using this indicator.

In order to calculate the multiple correlation indicator, it is necessary to calculate its index.

Least squares method

The least squares method is a way to estimate regression coefficients. Its essence is to minimize the sum of squared deviations of the observed values of y from the values given by the regression function.

A pairwise linear regression equation can be estimated this way. This type of equation is used when a paired linear relationship is detected between indicators.

Equation Parameters

Each parameter of the linear regression function has a specific meaning. The paired linear regression equation contains two parameters: c and m. The parameter m shows the average change in the result y when the variable x increases (decreases) by one unit. If x is zero, the function equals the parameter c. If x cannot take the value zero, c has no economic meaning, and only its sign can be interpreted. Because the elasticity of y with respect to x is m*x/y = 1 - c/y, a positive c (with y > 0) means the result changes relatively more slowly than the factor, and a negative c means it changes relatively faster.

Each parameter of the regression equation can be expressed through a formula. For example, c = ȳ - m*x̄, where ȳ and x̄ are the sample means of y and x.

Grouped data

In some problems all the information is grouped by the attribute x, and for each group only the average value of the dependent indicator is given. The group averages then show how the indicator changes with x, so grouped information can be used to find the regression equation and analyze the relationship. This approach has drawbacks. Group averages are often subject to random fluctuations that do not reflect the pattern of the relationship; they are noise that masks it. Averages therefore show the pattern much less clearly than a linear regression equation, but they can serve as the basis for finding one.

Multiplying the number of units in a group by the group average gives the sum of y within the group. Adding these sums over all groups gives the total sum of y. The sum of xy takes a little more work. If the intervals are narrow, x can be taken to be the same for all units within a group. Multiply that x by the group's sum of y to get the group's sum of the products of x and y, then add the group results to obtain the total sum of xy.
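The bookkeeping described for grouped data can be sketched as follows. Each group is represented by a tuple (x value, number of units, average y); this layout, the function name `fit_from_groups`, and the numbers are assumptions made for the illustration. The sums feed the usual formulas for the slope and intercept of a paired linear regression.

```python
def fit_from_groups(groups):
    """Estimate y = c + m*x from (x, count, mean_y) tuples, one per group."""
    n_total = sum(count for _, count, _ in groups)
    sum_x = sum(count * x for x, count, _ in groups)
    # Sum of y within a group = number of units * group average
    sum_y = sum(count * mean_y for _, count, mean_y in groups)
    # Sum of x*y within a group = x * (sum of y within the group)
    sum_xy = sum(x * count * mean_y for x, count, mean_y in groups)
    sum_xx = sum(count * x * x for x, count, _ in groups)
    m = (n_total * sum_xy - sum_x * sum_y) / (n_total * sum_xx - sum_x ** 2)
    c = (sum_y - m * sum_x) / n_total
    return c, m


# Hypothetical groups whose averages lie exactly on y = 5 + 2x
groups = [(1, 3, 7.0), (2, 5, 9.0), (4, 2, 13.0)]

c, m = fit_from_groups(groups)
print(f"y = {c:.2f} + {m:.2f} * x")
```

Because only group averages are used, the variation of y inside each group is lost, which is one reason the result is cruder than a regression on the individual observations.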

Multiple pairwise regression equation: assessing the importance of a relationship

As discussed earlier, multiple regression has the form $y = f(x_1, x_2, \dots, x_m) + E$. Most often, such an equation is used to study supply and demand for a product, interest income on repurchased shares, and the causes and form of the production cost function. It is also widely used in macroeconomic studies and calculations, and somewhat less often at the microeconomic level.

The main task of multiple regression is to build a model from a large amount of data in order to determine what influence each factor has, individually and jointly, on the indicator being modeled. The regression equation can take a wide variety of forms. To assess the relationship, two types of functions are usually used: linear and nonlinear.

The linear function has the form $y = a_0 + a_1 x_1 + a_2 x_2 + \dots + a_m x_m$. Here $a_1, a_2, \dots, a_m$ are the “pure” regression coefficients. Each characterizes the average change in y when the corresponding x changes (decreases or increases) by one unit while the other factors are held constant.
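To make the linear case concrete, here is a small Python sketch that estimates the coefficients by solving the normal equations with Gaussian elimination. It is one possible way to do it, not the method of any particular package; the function names and the noise-free data (generated from y = 1 + 2*x1 + 3*x2) are assumptions for the example.

```python
def solve(a, b):
    """Solve the linear system a*x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    aug = [row[:] + [bi] for row, bi in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[r][k] -= factor * aug[col][k]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(aug[i][k] * x[k] for k in range(i + 1, n))
        x[i] = (aug[i][n] - s) / aug[i][i]
    return x


def fit_multiple(rows, y):
    """rows: one tuple of factor values per observation. Returns [a0, a1, ..., am]."""
    design = [[1.0, *row] for row in rows]  # leading 1 for the intercept a0
    p = len(design[0])
    xtx = [[sum(d[i] * d[j] for d in design) for j in range(p)] for i in range(p)]
    xty = [sum(d[i] * yi for d, yi in zip(design, y)) for i in range(p)]
    return solve(xtx, xty)


# Hypothetical observations of two factors
rows = [(1, 2), (2, 1), (3, 4), (4, 3), (5, 5)]
y = [1 + 2 * x1 + 3 * x2 for x1, x2 in rows]

coeffs = fit_multiple(rows, y)
print([round(value, 6) for value in coeffs])
```

Each recovered coefficient is the change in y per unit change in its factor with the other factor held fixed, which is the "pure" interpretation given in the text.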

Nonlinear equations have, for example, the form of a power function $y = a x_1^{b_1} x_2^{b_2} \dots x_m^{b_m}$. Here $b_1, b_2, \dots, b_m$ are called elasticity coefficients: they show by what percentage the result changes when the corresponding x increases (decreases) by 1% and the other factors are held constant.
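For the single-factor special case y = a * x^b, the elasticity b can be estimated by taking logarithms, since ln y = ln a + b * ln x is a straight line in ln x. The sketch below assumes positive, noise-free, made-up data; `fit_power` is a hypothetical name.

```python
import math


def fit_power(x, y):
    """Fit y = a * x**b by least squares on the log-transformed data."""
    lx = [math.log(v) for v in x]
    ly = [math.log(v) for v in y]
    n = len(lx)
    mean_x = sum(lx) / n
    mean_y = sum(ly) / n
    sxx = sum((u - mean_x) ** 2 for u in lx)
    sxy = sum((u - mean_x) * (v - mean_y) for u, v in zip(lx, ly))
    b = sxy / sxx                      # elasticity coefficient
    a = math.exp(mean_y - b * mean_x)  # back-transform the intercept
    return a, b


# Hypothetical data generated exactly from y = 5 * x**0.5
x = [1, 2, 4, 8]
y = [5 * v ** 0.5 for v in x]

a, b = fit_power(x, y)
print(f"y = {a:.3f} * x^{b:.3f}")
```

An elasticity of 0.5 reads as: a 1% increase in x goes with roughly a 0.5% increase in y.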

What factors need to be taken into account when constructing multiple regression

To build a multiple regression correctly, you need to decide which factors to include.

This requires some understanding of the nature of the relationships between the economic factors and the quantity being modeled. Factors to be included must meet the following criteria:

- They must be quantitatively measurable. To use a factor that describes a qualitative property of an object, it must first be given a quantitative form.

- Factors should not be intercorrelated, let alone functionally related. Otherwise the system of normal equations becomes ill-conditioned, which makes the estimates unstable and unreliable.

- When the correlation between factors is very high, the isolated influence of each factor on the result cannot be determined, so the coefficients become uninterpretable.
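A simple screening step for the intercorrelation criterion is to compute the pairwise correlation between candidate factors and flag pairs that are too closely related. The sketch below does this in Python. The 0.8 threshold is an assumption chosen for the example, not a value from the text, and the factor names and data are made up.

```python
import math
from itertools import combinations


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length samples."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def collinear_pairs(factors, threshold=0.8):
    """Return pairs of factor names whose |r| exceeds the (assumed) threshold."""
    flagged = []
    for a, b in combinations(factors, 2):
        if abs(pearson_r(factors[a], factors[b])) > threshold:
            flagged.append((a, b))
    return flagged


# Hypothetical candidate factors; "assets" nearly duplicates "output"
factors = {
    "output": [10, 20, 30, 40, 50],
    "assets": [21, 39, 62, 80, 99],
    "staff": [5, 3, 6, 2, 4],
}

flagged = collinear_pairs(factors)
print(flagged)
```

When a pair is flagged, one of the two factors is usually dropped or the pair is combined before the regression is built.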

Construction methods

There are many methods for selecting factors for an equation. All of them select factors on the basis of correlation indicators. Among them are:

- Elimination method.

- Inclusion method.

- Stepwise regression analysis.

The first method starts from the full set of factors and screens out the unnecessary ones. The second introduces additional factors one at a time. The third also allows factors that were previously included in the equation to be removed. Each of these methods has its pros and cons, but all of them address the problem of eliminating unnecessary indicators. As a rule, the results obtained by the different methods are quite close.

Multivariate analysis methods

Such methods of determining factors are based on considering combinations of interrelated characteristics. They include discriminant analysis, pattern recognition, principal component analysis, and cluster analysis. There is also factor analysis, which grew out of the component method. Each is applied under specific conditions.

After correlation analysis has revealed statistical relationships between variables and assessed how close they are, we usually move on to a mathematical description of the specific type of dependence using regression analysis. For this purpose, a class of functions is selected that connects the resultant indicator y and the arguments $x_1, x_2, \dots, x_k$, the most informative arguments are selected, estimates of the unknown parameters of the relationship equation are calculated, and the properties of the resulting equation are analyzed.

The function $f(x_1, x_2, \dots, x_k)$ describing the dependence of the average value of the resultant characteristic y on the given values of the arguments is called the regression function (equation). The term “regression” (from Latin regressio: retreat, return to something) was introduced by the English psychologist and anthropologist F. Galton and is tied to the specifics of one of the first concrete examples in which the concept was used. While processing statistical data on the heredity of height, Galton found that if fathers deviate from the average height of all fathers by x inches, then their sons deviate from the average height of all sons by less than x inches. This tendency was called “regression to the mean.” Since then, the term “regression” has been widely used in the statistical literature, although in many cases it does not accurately characterize the concept of statistical dependence.

To describe the regression equation exactly, one must know the distribution law of the resultant indicator y. In statistical practice, one usually has to settle for suitable approximations of the unknown true regression function, since the researcher does not know the exact conditional probability distribution of y for given values of the argument x.

Let's consider the relationship between the true regression $f(x) = M(y \mid x)$, the model regression $\tilde{y}$, and the regression estimate $\hat{y}$. Let the resultant indicator y be related to the argument x by the relation

$y = 2x^{1.5} + \varepsilon$,

where $\varepsilon$ is a normally distributed random variable with $M\varepsilon = 0$ and $D\varepsilon = \sigma^2$. The true regression function in this case has the form $f(x) = M(y \mid x) = 2x^{1.5}$.

Let us assume that we do not know the exact form of the true regression equation, but we have nine observations of a two-dimensional random variable related by $y_i = 2x_i^{1.5} + \varepsilon_i$ and presented in Fig. 1.

Figure 1 - The relative position of the true regression $f(x)$ and the theoretical regression model $\tilde{y}$

The location of the points in Fig. 1 allows us to limit ourselves to the class of linear dependencies of the form $\tilde{y} = \beta_0 + \beta_1 x$. Using the least squares method, we find the estimate of the regression equation $\hat{y} = b_0 + b_1 x$. For comparison, Fig. 1 shows the graphs of the true regression function $y = 2x^{1.5}$ and the theoretical approximating regression function $\tilde{y} = \beta_0 + \beta_1 x$.

Since we made a mistake in choosing the class of the regression function, which is quite common in statistical practice, our statistical conclusions and estimates will be erroneous. No matter how much we increase the number of observations, our sample estimate $\hat{y}$ will not approach the true regression function $f(x)$. Had we chosen the class of regression functions correctly, the inaccuracy in describing $f(x)$ by $\tilde{y}$ could be explained only by the limited sample.
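The point that more data does not cure a wrongly chosen class of functions can be checked with a small simulation. The Python sketch below generates data from y = 2x^1.5 plus normal noise, fits a straight line, and measures the largest gap between the fitted line and the true function. The x range [0, 4], the noise level sigma = 1, the seed, and all function names are assumptions for the illustration; the original figure's data are not available.

```python
import random


def true_f(x):
    """True regression function from the example."""
    return 2 * x ** 1.5


def fit_line(x, y):
    """Least-squares estimates (b0, b1) of the line y = b0 + b1*x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    b1 = sxy / sxx
    return mean_y - b1 * mean_x, b1


def max_gap(n, sigma=1.0, seed=0):
    """Largest |fitted line - true function| on [0, 4] for a sample of size n."""
    rng = random.Random(seed)
    x = [4 * i / (n - 1) for i in range(n)]  # evenly spaced design points
    y = [true_f(xi) + rng.gauss(0, sigma) for xi in x]
    b0, b1 = fit_line(x, y)
    grid = [4 * i / 100 for i in range(101)]
    return max(abs(b0 + b1 * g - true_f(g)) for g in grid)


for n in (9, 100, 10000):
    print(n, round(max_gap(n), 2))
```

Under these assumptions the gap stays well above zero even for the largest sample, because the best straight line is itself a biased description of a curved function.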

To best recover, from the original statistical data, the conditional value of the resultant indicator $y(x)$ and the unknown regression function $f(x) = M(y \mid x)$, the following adequacy criteria (loss functions) are most often used.

Least squares method. The sum of squared deviations of the observed values of the resultant indicator $y_i$ ($i = 1, 2, \dots, n$) from the model values $\tilde{y}_i = f(x_i)$, where $x_i$ is the value of the argument vector in the i-th observation, is minimized: $\sum_{i=1}^{n} (y_i - f(x_i))^2 \to \min$. The resulting regression is called mean square regression.

Least absolute deviations method. The sum of absolute deviations of the observed values of the resultant indicator from the model values $\tilde{y}_i = f(x_i)$ is minimized: $\sum_{i=1}^{n} |y_i - f(x_i)| \to \min$. The result is mean absolute (median) regression.
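The difference between the two criteria is easiest to see for the simplest possible model, a constant f(x) = c. The Python sketch below searches a grid of candidate constants and shows that the squared criterion is minimized at the sample mean while the absolute criterion is minimized at the sample median. The data (with one deliberate outlier) and the grid step are assumptions for the illustration.

```python
import statistics

# Hypothetical sample with one outlier
values = [2, 3, 4, 5, 100]


def sum_squared(c):
    """Least squares criterion for the constant model f(x) = c."""
    return sum((v - c) ** 2 for v in values)


def sum_absolute(c):
    """Least absolute deviations criterion for the constant model f(x) = c."""
    return sum(abs(v - c) for v in values)


# Candidate constants 0.0, 0.1, ..., 100.0
candidates = [i / 10 for i in range(0, 1001)]

best_sq = min(candidates, key=sum_squared)
best_abs = min(candidates, key=sum_absolute)

print("least squares minimizer:", best_sq, "mean:", statistics.mean(values))
print("least absolute minimizer:", best_abs, "median:", statistics.median(values))
```

The outlier drags the least squares solution far from the bulk of the data, while the least absolute deviations solution barely notices it, which is why the latter is described as a median regression.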

Regression analysis is a method of statistical analysis of the dependence of a random variable y on variables $x_j$ ($j = 1, 2, \dots, k$), which are treated in regression analysis as non-random regardless of their true distribution law.

It is usually assumed that the random variable y is normally distributed with a conditional mathematical expectation $\tilde{y}$, which is a function of the arguments $x_j$ ($j = 1, 2, \dots, k$), and a constant variance $\sigma^2$ that does not depend on the arguments.

In general, the linear regression analysis model has the form:

$y = \sum_{j=0}^{k} \beta_j \varphi_j(x_1, x_2, \dots, x_k) + \varepsilon$,

where $\varphi_j$ is some function of the variables $x_1, x_2, \dots, x_k$, and $\varepsilon$ is a random variable with zero mathematical expectation and variance $\sigma^2$.

In regression analysis, the type of regression equation is chosen based on the physical nature of the phenomenon being studied and the results of observation.

Estimates of the unknown parameters of the regression equation are usually found using the least squares method. Below we will dwell on this problem in more detail.

Bivariate linear regression equation. Let us assume, based on analysis of the phenomenon under study, that “on average” y is a linear function of x, i.e. there is a regression equation

$\tilde{y} = M(y \mid x) = \beta_0 + \beta_1 x$,

where $M(y \mid x)$ is the conditional mathematical expectation of the random variable y for a given x, and $\beta_0$ and $\beta_1$ are unknown parameters of the general population, which must be estimated from sample observations.

Suppose that to estimate $\beta_0$ and $\beta_1$, a sample of size n is taken from a two-dimensional population (x, y), where $(x_i, y_i)$ is the result of the i-th observation ($i = 1, 2, \dots, n$). In this case, the regression analysis model has the form:

$y_i = \beta_0 + \beta_1 x_i + \varepsilon_i$,

where $\varepsilon_i$ are independent normally distributed random variables with zero mathematical expectation and variance $\sigma^2$, i.e. $M\varepsilon_i = 0$ and $D\varepsilon_i = \sigma^2$ for all $i = 1, 2, \dots, n$.

According to the least squares method, the estimates of the unknown parameters $\beta_0$ and $\beta_1$ are the sample values $b_0$ and $b_1$ that minimize the sum of squared deviations of the observed values $y_i$ from the conditional mathematical expectation $\tilde{y}_i$.
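Written out, the criterion and its solution take the standard form below. This is a sketch of the usual textbook derivation, not a reconstruction of the lost original formula: the name $Q$ for the criterion and the bars for sample means are notation introduced here.

```latex
% Criterion: sum of squared deviations from the fitted line
Q(b_0, b_1) = \sum_{i=1}^{n} (y_i - b_0 - b_1 x_i)^2 \to \min

% Setting both partial derivatives to zero gives the normal equations
\frac{\partial Q}{\partial b_0} = -2 \sum_{i=1}^{n} (y_i - b_0 - b_1 x_i) = 0, \qquad
\frac{\partial Q}{\partial b_1} = -2 \sum_{i=1}^{n} x_i (y_i - b_0 - b_1 x_i) = 0

% Solving the two equations for the estimates
b_1 = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sum_{i=1}^{n} (x_i - \bar{x})^2}, \qquad
b_0 = \bar{y} - b_1 \bar{x}
```

The second formula shows that the fitted line always passes through the point of sample means $(\bar{x}, \bar{y})$.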

We will consider the methodology for determining the influence of marketing characteristics on the profit of an enterprise using the example of seventeen typical enterprises with average sizes and indicators of economic activity.

When solving the problem, the following characteristics were taken into account, identified as the most significant (important) as a result of the questionnaire survey:

* innovative activity of the enterprise;

* planning the range of products produced;

* formation of pricing policy;

* public relations;

* sales system;

* employee incentive system.

Based on a system of comparisons by factors, square matrices of adjacency were constructed, in which the values of relative priorities were calculated for each factor: innovative activity of the enterprise, planning of the range of products, formation of pricing policy, advertising, public relations, sales system, employee incentive system.

Estimates of priorities for the factor “public relations” were obtained from a survey of enterprise specialists. The following notations are accepted: > (better), ≥ (better or the same), = (same), ≤ (worse or the same), < (worse).

Next, the task of comprehensive assessment of the enterprise’s marketing level was solved. When calculating the indicator, the significance (weight) of the considered partial characteristics was determined and the problem of linear convolution of partial indicators was solved. Data processing was carried out using specially developed programs.

Next, a comprehensive assessment of the enterprise's marketing level is calculated - the marketing coefficient, which is entered in Table 1. In addition, the table includes indicators characterizing the enterprise as a whole. The data in the table will be used to perform regression analysis. The resultant attribute is profit. Along with the marketing coefficient, the following indicators were used as factor indicators: the volume of gross output, the cost of fixed assets, the number of employees, and the specialization coefficient.

Table 1 - Initial data for regression analysis

According to the table data and on the basis of factors with the most significant values of correlation coefficients, regression functions of the dependence of profit on factors were constructed.

The regression equation in our case will take the form:

The quantitative influence of the factors discussed above on the amount of profit is indicated by the coefficients of the regression equation. They show how many thousand rubles its value changes when the factor characteristic changes by one unit. As follows from the equation, an increase in the marketing mix coefficient by one unit gives an increase in profit by 1547.7 thousand rubles. This suggests that improving marketing activities has enormous potential for improving the economic performance of enterprises.

When studying marketing effectiveness, the most interesting and most important factor is factor X5 - the marketing coefficient. In accordance with the theory of statistics, the advantage of the existing multiple regression equation is the ability to evaluate the isolated influence of each factor, including the marketing factor.

The results of the regression analysis can be used for more than calculating the parameters of the equation. The criterion for classifying enterprises as relatively better or relatively worse (Kef) is based on the relative indicator of the result:

where $Y_{\text{fact},i}$ is the actual profit of the i-th enterprise, thousand rubles;

$Y_{\text{calc},i}$ is the profit of the i-th enterprise calculated from the regression equation.

In terms of the problem being solved, this value is called the “efficiency coefficient”. The activity of an enterprise can be considered effective when the coefficient is greater than one. This means that the actual profit is greater than the profit the regression predicts, on average over the sample, for an enterprise with the same factor values.
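The formula for the coefficient did not survive in the text. The Python sketch below assumes, from the description of the two quantities and the "greater than one" rule, that the coefficient is the ratio of actual profit to calculated profit. The enterprise labels and profit figures are invented for the illustration and are not the values from Table 2.

```python
def efficiency(actual, calculated):
    """Assumed efficiency coefficient: actual profit / profit from the regression."""
    return {name: actual[name] / calculated[name] for name in actual}


# Hypothetical profits, thousand rubles
actual = {"A": 1200.0, "B": 800.0, "C": 950.0}
calculated = {"A": 1000.0, "B": 1000.0, "C": 950.0}

k_ef = efficiency(actual, calculated)
effective = [name for name, value in k_ef.items() if value > 1]

print(k_ef)
print("effective:", effective)
```

Under this assumption, an enterprise counts as effective only when it earns more than the regression predicts for its set of factor values.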

Actual and estimated profit values are presented in Table 2.

Table 2 - Analysis of the resulting characteristic in the regression model

Analysis of the table shows that in our case, the activities of enterprises 3, 5, 7, 9, 12, 14, 15, 17 for the period under review can be considered successful.

Regression analysis is one of the most popular methods of statistical research. It can be used to establish the degree of influence of independent variables on the dependent variable. Microsoft Excel has tools designed to perform this type of analysis. Let's look at what they are and how to use them.

To use the tool that performs regression analysis, you first need to activate the Analysis ToolPak. Only then do the necessary tools appear on the Excel ribbon.

Now, when we go to the "Data" tab, we will see a new button, "Data Analysis", in the "Analysis" group on the ribbon.

Types of Regression Analysis

There are several types of regression:

- parabolic;

- power;

- logarithmic;

- exponential;

- exponential with an arbitrary base;

- hyperbolic;

- linear.

We will talk in more detail about performing the last type of regression analysis in Excel later.

Linear Regression in Excel

Below, as an example, is a table showing the average daily air temperature outside and the number of store customers for the corresponding working day. Let's find out using regression analysis exactly how weather conditions in the form of air temperature can affect the attendance of a retail establishment.

The general linear regression equation is as follows: $Y = a_0 + a_1 x_1 + \dots + a_k x_k$. In this formula, Y is the variable whose dependence on the factors we are studying; in our case, the number of buyers. The x values are the factors that influence it. The parameters a are the regression coefficients, which determine the weight of each factor. The index k denotes the total number of factors.

Analyzing the results

The results of the regression analysis are displayed as a table in the place specified in the settings.

One of the main indicators is R Square. It indicates the quality of the model. In our case, this coefficient is 0.705, or about 70.5%, which is an acceptable level of quality. A value below 0.5 is considered poor.

Another important indicator is in the cell at the intersection of the "Intercept" row and the "Coefficients" column. It shows what value Y (in our case, the number of buyers) takes when all other factors are equal to zero. In this table, the value is 58.04.

The value at the intersection of the "X Variable 1" row and the "Coefficients" column shows how Y depends on X; in our case, how the number of store customers depends on temperature. A coefficient of 1.31 means that each additional degree is associated with about 1.31 more customers, which is treated here as a fairly high level of influence.
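Together, the two coefficients define the fitted line Y = 58.04 + 1.31 * x, which can be used for rough predictions. The Python sketch below wraps it in a function; the function name and the sample temperatures are illustrative, and the predictions are only as good as the R-squared of about 0.705 allows.

```python
INTERCEPT = 58.04  # value from the "Intercept" row of the output
SLOPE = 1.31       # value from the "X Variable 1" row of the output


def predict_customers(temperature):
    """Expected number of customers for a given average daily temperature."""
    return INTERCEPT + SLOPE * temperature


for temperature in (0, 10, 20):
    print(temperature, round(predict_customers(temperature), 2))
```

Predictions far outside the temperature range of the original table should be treated with caution, since the linear relationship was only observed within that range.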

As you can see, using Microsoft Excel it is quite easy to create a regression analysis table. But only a trained person can work with the output data and understand its essence.

In works dating back to 1908, the method was described using the example of a real estate agent. In his records, the agent kept track of a wide range of input data for each building. Based on the sale results, it was determined which factor had the greatest influence on the transaction price.

Analysis of a large number of transactions yielded interesting results. The final price was influenced by many factors, sometimes leading to paradoxical conclusions and even obvious “outliers” when a house with high initial potential was sold at a reduced price.

The second example of applying such an analysis is the task of determining employee remuneration. The difficulty was that it required not distributing a fixed amount among everyone, but matching pay strictly to the specific work performed. The appearance of many problems with practically similar solutions called for a more detailed mathematical study.

A significant place was allocated to the section “regression analysis”, which combined practical methods used to study dependencies that fall under the concept of regression. These relationships are observed between data obtained from statistical studies.

Among the many tasks to be solved, three are central: determining the general form of the regression equation; constructing estimates of the unknown parameters in the regression equation; and testing statistical hypotheses about the regression. When studying the relationship between a pair of quantities obtained from experimental observations and forming a series of the type (x1, y1), ..., (xn, yn), one relies on regression theory and assumes that Y has a certain probability distribution while X remains fixed.

The result Y depends on the value of the variable X; this dependence can be determined by various patterns, while the accuracy of the results obtained is influenced by the nature of the observations and the purpose of the analysis. The experimental model is based on certain assumptions that are simplified but plausible. The main condition is that the parameter X is a controlled quantity. Its values are set before the start of the experiment.

If a pair of uncontrolled variables XY is used during an experiment, then regression analysis is carried out in the same way, but methods are used to interpret the results, during which the relationship of the random variables under study is studied. Methods of mathematical statistics are not an abstract topic. They find application in life in various spheres of human activity.

In the scientific literature, the term linear regression analysis is widely used to define the above method. For variable X, the term regressor or predictor is used, and dependent Y variables are also called criterion variables. This terminology reflects only the mathematical dependence of the variables, but not the cause-and-effect relationship.

Regression analysis is the most common method used in processing the results of a wide variety of observations. Physical and biological dependencies are studied using this method; it is implemented both in economics and in technology. A lot of other fields use regression analysis models. Analysis of variance and multivariate statistical analysis work closely with this method of study.