Т" = с (i), Т" = 1………….(i), Т"" = 0…1……….(i) b(λ)……….(j) 1…0… …….(j) .

As a result of applying the right elementary operation, the matrix A(λ) is multiplied on the right by the corresponding matrix T.

Note that the matrix T" coincides with the matrix S", and the matrices T", T"" coincide with the matrices S", S"", if the indices i and j are swapped in the latter. Matrices of type S", S", S"" (or, what is the same, type T", T", T"") are called elementary.

Two λ-matrices A(λ) and B(λ) same sizes m x n are called equivalent, A(λ) ~ B(λ), if one can go from the matrix A(λ) to B(λ) using a chain of finite number elementary transformations. The equivalence relation has three main properties:

1) reflexivity: each matrix is equivalent to itself A(λ) ~ B(λ);

2) symmetry: if A(λ) ~ B(λ), then B(λ) ~ A(λ);

3) transitivity: if A(λ) ~ B(λ), and B(λ) ~ C(λ), then A(λ) ~ C(λ).

§2. Canonical form of the λ-matrix

It was shown above that the equivalence relation is transitive, symmetric and reflexive. It follows that the set of all λ-matrices of given sizes m x n is divided into disjoint classes equivalent matrices, i.e. into classes such that any two matrices from the same class are equivalent, and from different classes- are not equivalent to each other. The question arises about the canonical form of the λ-matrix characterizing this class equivalent λ-matrices.

A canonical diagonal λ-matrix of dimensions m x n is a λ-matrix whose main diagonal contains the polynomials E1(λ), E2(λ), ..., Ep(λ), where p is the smaller of the numbers m and n, and not equal to zero among these polynomials have leading coefficients equal to one, and each subsequent polynomial is divided by the previous one, yet the elements outside the main diagonal are equal to zero.

Theorem 1. Any λ-matrix can be reduced to a canonical diagonal form by a finite number of elementary transformations.

Proof. Let A(λ) be a rectangular polynomial matrix. Applying both left and right elementary operations to A(λ) we lead to the canonical diagonal form.

Among all non-zero elements аіј(λ) of the matrix A(λ), we take the element that has the smallest degree with respect to λ, and by appropriately rearranging the rows and columns we make it an element a11(λ). After this, we will find the quotients and remainders from dividing the polynomials аі1(λ) and а1ј(λ) by а11(λ):

аі1(λ) = а11(λ) qі1(λ) + rі1 (λ), а1ј(λ) = а11(λ) q1ј(λ) + r1ј(λ)

(i = 2, 3, …, m; j = 2, 3, …, n).

If at least one of the remainders rі1(λ), r1ј(λ) (i = 2, …, m; j = 2, …, n), for example r1ј (λ), is not identically zero, then, subtracting from j- of the first column, previously multiplied by q1ј(λ), we replace the element a1ј(λ) with the remainder r1ј(λ), which has a lower degree than a11(λ). Then we have the opportunity to again reduce the degree of the element on the left top corner matrix, placing in this place the element with the smallest degree relative to λ.

If all the remainders are r21(λ), … rm1(λ); r12(λ), …, r1n(λ) are identically zero, then, subtracting from the i-th row the first, multiplied previously by qі1(λ) (i = 2, …, m), and from the j-th column - the first , previously multiplied by q1ј(λ) (j = 2, …, n), we reduce our matrix to the form

а11(λ) 0 … 0

0 а22(λ) … а2n(λ)

….…………………… .

0 am2(λ) … amn(λ)

If at the same time at least one of the elements аіј(λ) (i = 2, …, m; j = 2, …, n) is not divisible by а11(λ) without a remainder, then by adding to the first column the column that contains this element, we will come to the previous case and, therefore, we will again be able to replace the element a11(λ) with a polynomial of lower degree.

Since the original element a11(λ) had a certain degree and the process of reducing this degree cannot continue indefinitely, then after a finite number of elementary operations we must obtain a matrix of the form

(*) 0 b22(λ) … b 2n(λ)

….…………………… ,

0 bm2 (λ) …bmn (λ)

in which all elements bіј(λ) are divisible by а1(λ) without remainder. If among these elements bіј(λ) there are not identically zero, then continuing the same reduction process for rows with numbers 2, …, m and columns with numbers 2, …, n, we will reduce the matrix (*) to the form

Thus, we have proven that an arbitrary rectangular polynomial matrix A(λ) is equivalent to some canonical diagonal one.

Definition. A polynomial matrix or -matrix is a rectangular matrix whose elements are polynomials in one variable  with numerical coefficients.

with numerical coefficients.

Over  -matrices can perform elementary transformations. These include:

-matrices can perform elementary transformations. These include:

Two  -matrices

-matrices  And

And  identical sizes are called equivalent:

identical sizes are called equivalent:  , if from the matrix

, if from the matrix  To

To  can be passed using a finite number of elementary transformations.

can be passed using a finite number of elementary transformations.

Example. Prove matrix equivalence

|

|

|

Solution.

.

.

.

.

Multiply the second line by (–1) and notice that

.

.

.

.

Plenty of everyone  -matrices of given sizes

-matrices of given sizes  is divided into disjoint classes of equivalent matrices. Matrices that are equivalent to each other form one class, and those that are not equivalent form another.

is divided into disjoint classes of equivalent matrices. Matrices that are equivalent to each other form one class, and those that are not equivalent form another.

Each class of equivalent matrices is characterized by a canonical, or normal,  -matrix of given dimensions.

-matrix of given dimensions.

Definition. Canonical, or normal,  -size matrix

-size matrix  called

called  -matrix with polynomials on the main diagonal, where r– the smaller of the numbers m And n

(

-matrix with polynomials on the main diagonal, where r– the smaller of the numbers m And n

( ), and polynomials that are not equal to zero have leading coefficients equal to 1, and each subsequent polynomial is divided by the previous one. All elements outside the main diagonal are 0.

), and polynomials that are not equal to zero have leading coefficients equal to 1, and each subsequent polynomial is divided by the previous one. All elements outside the main diagonal are 0.

From the definition it follows that if among the polynomials there are polynomials of degree zero, then they are at the beginning of the main diagonal. If there are zeros, they are at the end of the main diagonal.

Matrix  the previous example is canonical. Matrix

the previous example is canonical. Matrix

also canonical.

Every class  -matrix contains a unique canonical

-matrix contains a unique canonical  -matrix, i.e. each

-matrix, i.e. each  -matrix is equivalent to a unique canonical matrix, which is called the canonical form or normal form of this matrix.

-matrix is equivalent to a unique canonical matrix, which is called the canonical form or normal form of this matrix.

Polynomials on the main diagonal of the canonical form of a given  -matrices are called invariant factors of a given matrix.

-matrices are called invariant factors of a given matrix.

One of the methods for calculating invariant factors is to reduce the given  -matrices to canonical form.

-matrices to canonical form.

So, for the matrix  of the previous example, the invariant factors are

of the previous example, the invariant factors are

,

,

,

, ,

, .

.

From the above it follows that the presence of the same set of invariant factors is a necessary and sufficient condition for equivalence  -matrices

-matrices

Bringing  -matrices to canonical form reduces to defining invariant factors

-matrices to canonical form reduces to defining invariant factors

,

,

;

; ,

,

Where r– rank  -matrices;

-matrices;  – largest common divisor minors k-th order, taken with a leading coefficient equal to 1.

– largest common divisor minors k-th order, taken with a leading coefficient equal to 1.

Example. Let it be given  -matrix

-matrix

.

.

Solution. Obviously, the greatest common divisor of the first order D 1

=1, i.e.  .

.

Let's define second-order minors:

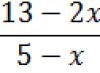

|

|

|

This data alone is enough to draw the following conclusion: D 2

=1, therefore,  .

.

We define D 3

,

,

Hence,  .

.

Thus, the canonical form of this matrix is the following  -matrix:

-matrix:

.

.

A matrix polynomial is an expression of the form

Where  – variable;

– variable;  – square matrices of order n with numerical elements.

– square matrices of order n with numerical elements.

If  , That S is called the degree of the matrix polynomial, n– the order of the matrix polynomial.

, That S is called the degree of the matrix polynomial, n– the order of the matrix polynomial.

I like quadratic  -matrix can be represented as a matrix polynomial. Obviously, the opposite statement is also true, i.e. any matrix polynomial can be represented as some square

-matrix can be represented as a matrix polynomial. Obviously, the opposite statement is also true, i.e. any matrix polynomial can be represented as some square  -matrices.

-matrices.

The validity of these statements clearly follows from the properties of operations on matrices. Let's look at the following examples:

Example. Represent a polynomial matrix

in the form of a matrix polynomial as follows

.

.

Example. Matrix polynomial

can be represented as the following polynomial matrix (  -matrices)

-matrices)

.

.

This interchangeability of matrix polynomials and polynomial matrices plays a significant role in the mathematical apparatus of factor and component analysis methods.

Matrix polynomials of the same order can be added, subtracted, and multiplied in the same way as ordinary polynomials with numerical coefficients. It should, however, be remembered that multiplication of matrix polynomials, generally speaking, is not commutative, since Matrix multiplication is not commutative.

Two matrix polynomial are called equal if their coefficients are equal, i.e. corresponding matrices for the same powers of the variable  .

.

The sum (difference) of two matrix polynomials  And

And  is a matrix polynomial whose coefficient for each power of the variable

is a matrix polynomial whose coefficient for each power of the variable  equal to the sum (difference) of coefficients at the same degree

equal to the sum (difference) of coefficients at the same degree  in polynomials

in polynomials  And

And  .

.

To multiply a matrix polynomial  to a matrix polynomial

to a matrix polynomial  , you need each term of the matrix polynomial

, you need each term of the matrix polynomial  multiply by each term of the matrix polynomial

multiply by each term of the matrix polynomial  , add the resulting products and bring similar terms.

, add the resulting products and bring similar terms.

Degree of matrix polynomial – product

less than or equal to the sum of the powers of the factors.

less than or equal to the sum of the powers of the factors.

Operations on matrix polynomials can be carried out using operations on the corresponding  -matrices.

-matrices.

To add (subtract) matrix polynomials, it is enough to add (subtract) the corresponding  -matrices. The same applies to multiplication.

-matrices. The same applies to multiplication.  -the matrix of the product of matrix polynomials is equal to the product

-the matrix of the product of matrix polynomials is equal to the product  -matrices of factors.

-matrices of factors.

Example.

|

|

|

On the other side  And

And  can be written in the form

can be written in the form

|

|

|

Since matrix multiplication is not commutative, for matrix polynomials two divisions with a remainder are defined - right and left.

Let two matrix polynomials of order n be given

Where IN 0 is a non-singular matrix.

When dividing  on

on  there is a unique right quotient

there is a unique right quotient  and right remainder

and right remainder

where is the degree R 1

less degree  , or

, or  (division without remainder), as well as the left quotient

(division without remainder), as well as the left quotient  and left remainder

and left remainder

where is the degree  less degree

less degree  , or

, or  =0 (division without remainder).

=0 (division without remainder).

Generalized Bezout's theorem. When dividing a matrix polynomial  to a polynomial

to a polynomial  the right remainder is equal to the right value of the dividend

the right remainder is equal to the right value of the dividend  at

at  , i.e. matrix

, i.e. matrix

and the left remainder – to the left value of the dividend  at

at  , i.e. matrix

, i.e. matrix

Proof. The proof of the validity of both formulas (3.4.1) and (3.4.2) is carried out in the same way, by direct substitution. Let's prove one of them.

So, the dividend is  , divider -

, divider -  , as a quotient we have the polynomial

, as a quotient we have the polynomial

Let's define the product  :

:

or

Q.E.D.

Consequence.

is divisible from the right (left) by a polynomial

is divisible from the right (left) by a polynomial  then and only when

then and only when  equals 0.

equals 0.

Example. Show that the matrix polynomial

is divisible by a matrix polynomial  ,

,

Where  , left without remainder.

, left without remainder.

Solution. Indeed, the equality is true

Where

Let's calculate the value of the left remainder using Bezout's theorem

Matrices are a convenient tool for solving a wide variety of algebraic problems. Knowing some simple rules for operating with them allows you to reduce matrices to any convenient and necessary in at the moment forms. It is often useful to use the canonical form of the matrix.

Instructions

- Remember that the canonical form of the matrix does not require that there be ones along the entire main diagonal. The essence of the definition is that the only non-zero elements of the matrix in its canonical form are ones. If they are present, they are located on the main diagonal. Moreover, their number can vary from zero to the number of lines in the matrix.

- Do not forget that elementary transformations allow any matrix to be reduced to the canonical mind. The biggest difficulty is to intuitively find the simplest sequence of chains of actions and not make mistakes in the calculations.

- Learn the basic properties of operations with rows and columns in a matrix. Elementary transformations include three standard transformations. This is the multiplication of a matrix row by any non-zero number, the summation of rows (including addition to one another, multiplied by some number) and their rearrangement. Such actions allow us to obtain a matrix equivalent to this one. Accordingly, you can perform such operations on columns without losing equivalence.

- Try not to perform several elementary transformations at once: move from stage to stage to prevent accidental mistakes.

- Find the rank of the matrix to determine the number of ones on the main diagonal: this will tell you what the final form you are looking for will be. canonical form, and will eliminate the need to perform transformations if you just want to use it for a solution.

- Use the bordering minors method to follow the previous recommendation. Calculate the kth order minor, as well as all surrounding minors of degree (k+1). If they are equal to zero, then the rank of the matrix is the number k. Do not forget that the minor Mij is the determinant of the matrix obtained by deleting row i and column j from the original one.

A matrix of dimensions is said to have canonical form, if it can be divided into four blocks (some of them may be empty), each of which is a submatrix of a certain type ( submatrix is called a matrix that is part of the original matrix). The top left block is the identity matrix k-th order, two lower blocks – matrices of dimensions and ![]() consisting of zeros (in the diagram these matrices are indicated by large bold zeros). Top right block – arbitrary matrix dimensions. Number k> 0 and does not exceed numbers m And n.

consisting of zeros (in the diagram these matrices are indicated by large bold zeros). Top right block – arbitrary matrix dimensions. Number k> 0 and does not exceed numbers m And n.

If , there are no right blocks, if , there are no bottom (zero) blocks. If , the matrix consists of one (unit) block.

Let's bring specific examples matrices having a canonical form (the dots indicate those elements of the matrices specific values which do not play a role):

A)  , b) , c) , d)

, b) , c) , d)  .

.

In example a) , ( k coincides with the number of rows), both zero submatrices are missing; in example b) ( k coincides with the number of columns), , both right blocks are missing, the zero submatrix is a row matrix; in example c), the first zero submatrix is a row matrix, the second zero submatrix consists of one element; in example d) , , .

Often, in the definition of a matrix of canonical form, instead of a unit submatrix, a triangular submatrix appears. In this case we talk about the matrix almost canonical kind. Since the identity matrix is special case triangular, matrix of canonical form is a special case of matrices of almost canonical form. If in a schematic representation of a matrix of a canonical form the identity matrix in the upper left block is replaced by a triangular one, a schematic of a matrix of an almost canonical form will be obtained.

Let us give examples of matrices that have an almost canonical form:

A)  , b) , c)

, b) , c)  , G)

, G)  .

.

The following matrix transformations are called acceptable: rearrangement of strings; rearrangement of columns; multiplying the elements of a matrix row by the same number other than zero; adding to one of the rows of the matrix another row, previously multiplied by a certain number (in particular, subtracting one row from another and adding one row to another). As will be shown below, admissible transformations of matrices correspond to those actions with systems linear equations, which do not violate equivalence.

Using admissible transformations, any matrix A can be reduced to a matrix having canonical view.

Reducing a matrix to canonical form can be divided into stages, each of which consists of two steps - obtaining the next unit on the main diagonal and turning the corresponding column into unit a column, that is, one in which all elements, with the exception of the diagonal, are equal to zero.

The first step is carried out as follows. If the diagonal element in question equal to one, move on to the second step. If the diagonal element is not equal to one, but is different from zero, divide all the elements of its row by it. If the diagonal element is equal to zero, then we will look for a non-zero element located either in its (diagonal element’s) column, but below, or in its row, but to the right, or below and to the right at the same time. If such an element is found, we will make it diagonal by rearranging the corresponding rows (in the first case), or columns (in the second), or rows and columns in turn (in the third). If such an element is not found, this will mean that the process is completed.

If the first step is completed, and the column in which the new unit diagonal element is located contains another non-zero element, add to its row the row of the diagonal element multiplied by the element to be destroyed, taken with the opposite sign.

Let's consider an example of reducing a matrix to canonical form.

~ ~

~ ~  ~

~

First diagonal First diagonal

element is zero. element is non-null.

~ ~  ~

~  ~

~

First diagonal

element became equal to one

~  ~ ~

~ ~  ~

~

A matrix is a special object in mathematics. Shown in a rectangular or square table, made up of a certain number rows and columns. In mathematics there is a wide variety of types of matrices, varying in size or content. The numbers of its rows and columns are called orders. These objects are used in mathematics to organize the recording of systems of linear equations and conveniently search for their results. Equations using a matrix are solved using the method of Carl Gauss, Gabriel Cramer, minors and algebraic additions, as well as in many other ways. The basic skill when working with matrices is reduction to standard view. However, first, let's figure out what types of matrices are distinguished by mathematicians.

Null type

All components of this type of matrix are zeros. Meanwhile, the number of its rows and columns is completely different.

Square type

The number of columns and rows of this type of matrix is the same. In other words, it is a “square” shaped table. The number of its columns (or rows) is called the order. Special cases include the existence of a second-order matrix (2x2 matrix), fourth order(4x4), tenth (10x10), seventeenth (17x17) and so on.

Column vector

This is one of the simplest types of matrices, containing only one column, which includes three numerical values. She represents a series free members(numbers independent of variables) in systems of linear equations.

View similar to the previous one. Consists of three numerical elements, in turn organized into one line.

Diagonal type

Numerical values in the diagonal form of the matrix take only components of the main diagonal (highlighted green). The main diagonal begins with the element located in the upper right corner and ends with the number in the third column of the third row. The remaining components are equal to zero. The diagonal type is only a square matrix of some order. Among the diagonal matrices, one can distinguish the scalar one. All its components take same values.

A subtype of diagonal matrix. All of her numeric values are units. Using a single type of matrix table, one performs its basic transformations or finds a matrix inverse to the original one.

Canonical type

The canonical form of the matrix is considered one of the main ones; Reducing to it is often necessary for work. The number of rows and columns in a canonical matrix varies; it does not necessarily belong to square type. It is somewhat similar to the identity matrix, but in its case not all components of the main diagonal take on the value equal to one. There can be two or four main diagonal units (it all depends on the length and width of the matrix). Or there may be no units at all (then it is considered zero). The remaining components of the canonical type, as well as the diagonal and unit elements, are equal to zero.

Triangular type

One of the most important types of matrix, used when searching for its determinant and when performing simple operations. The triangular type comes from the diagonal type, so the matrix is also square. The triangular type of matrix is divided into upper triangular and lower triangular.

In an upper triangular matrix (Fig. 1), only elements that are above the main diagonal take a value equal to zero. The components of the diagonal itself and the part of the matrix located under it contain numerical values.

In the lower triangular matrix (Fig. 2), on the contrary, the elements located in the lower part of the matrix are equal to zero.

The view is necessary to find the rank of a matrix, as well as for elementary operations on them (along with triangular type). The step matrix is so named because it contains characteristic "steps" of zeros (as shown in the figure). In the step type, a diagonal of zeros is formed (not necessarily the main one), and all elements under this diagonal also have values equal to zero. A prerequisite is the following: if there is a zero row in the step matrix, then the remaining rows below it also do not contain numerical values.

So we looked at most important types matrices necessary to work with them. Now let's look at the problem of converting the matrix into the required form.

Reducing to triangular form

How to bring a matrix to a triangular form? Most often in tasks you need to transform a matrix into a triangular form in order to find its determinant, otherwise called a determinant. When performing this procedure, it is extremely important to “preserve” the main diagonal of the matrix, because the determinant of a triangular matrix is equal to the product of the components of its main diagonal. Let me also recall alternative methods for finding the determinant. The determinant of the square type is found using special formulas. For example, you can use the triangle method. For other matrices, the method of decomposition by row, column or their elements is used. You can also use the method of minors and algebraic matrix additions.

Let us analyze in detail the process of reducing a matrix to a triangular form using examples of some tasks.

Task 1

It is necessary to find the determinant of the presented matrix using the method of reducing it to triangular form.

The matrix given to us is a third-order square matrix. Therefore, to convert it to triangular shape we need to turn two components of the first column and one component of the second to zero.

To bring it to triangular form, we start the transformation from the lower left corner of the matrix - from the number 6. To turn it to zero, multiply the first row by three and subtract it from the last row.

Important! The top row does not change, but remains the same as in the original matrix. There is no need to write a string four times larger than the original one. But the values of the strings whose components need to be set to zero are constantly changing.

All that's left is last value- element of the third row of the second column. This is the number (-1). To turn it to zero, subtract the second from the first line.

Let's check:

detA = 2 x (-1) x 11 = -22.

This means that the answer to the task is -22.

Task 2

It is necessary to find the determinant of the matrix by reducing it to triangular form.

The presented matrix belongs to the square type and is a fourth-order matrix. This means that it is necessary to turn three components of the first column, two components of the second column and one component of the third to zero.

Let's start converting it from the element located in the lower left corner - from the number 4. We need to reverse given number to zero. The easiest way to do this is to multiply the top line by four and then subtract it from the fourth. Let's write down the result of the first stage of transformation.

So the fourth row component is set to zero. Let's move on to the first element of the third line, to the number 3. We perform a similar operation. We multiply the first line by three, subtract it from the third line and write down the result.

We managed to turn to zero all the components of the first column of this square matrix, with the exception of the number 1 - an element of the main diagonal that does not require transformation. Now it is important to preserve the resulting zeros, so we will perform the transformations with rows, not with columns. Let's move on to the second column of the presented matrix.

Let's start again at the bottom - with the element of the second column of the last row. This number is (-7). However, in in this case It is more convenient to start with the number (-1) - the element of the second column of the third row. To turn it to zero, subtract the second from the third line. Then we multiply the second line by seven and subtract it from the fourth. We got zero instead of the element located in the fourth row of the second column. Now let's move on to the third column.

In this column we need to turn only one number to zero - 4. This is not difficult to do: just add to last line the third and we see the zero we need.

After all the transformations made, we brought the proposed matrix to a triangular form. Now, to find its determinant, you only need to multiply the resulting elements of the main diagonal. We get: detA = 1 x (-1) x (-4) x 40 = 160. Therefore, the solution is 160.

So, now the question of reducing the matrix to triangular form will not bother you.

Reducing to a stepped form

For elementary operations on matrices, the stepped form is less “in demand” than the triangular one. It is most often used to find the rank of a matrix (i.e., the number of its non-zero rows) or to determine linearly dependent and independent rows. However, the stepped type of matrix is more universal, as it is suitable not only for the square type, but also for all others.

To reduce a matrix to stepwise form, you first need to find its determinant. The above methods are suitable for this. The purpose of finding the determinant is to find out whether it can be converted into a step matrix. If the determinant is greater or less than zero, then you can calmly begin the task. If it is equal to zero, it will not be possible to reduce the matrix to a stepwise form. In this case, you need to check whether there are any errors in the recording or in the matrix transformations. If there are no such inaccuracies, the task cannot be solved.

Let's look at how to reduce a matrix to a stepwise form using examples of several tasks.

Task 1. Find the rank of the given matrix table.

Before us square matrix third order (3x3). We know that to find the rank it is necessary to reduce it to a stepwise form. Therefore, first we need to find the determinant of the matrix. Let's use the triangle method: detA = (1 x 5 x 0) + (2 x 1 x 2) + (6 x 3 x 4) - (1 x 1 x 4) - (2 x 3 x 0) - (6 x 5 x 2) = 12.

Determinant = 12. He greater than zero, which means that the matrix can be reduced to a stepwise form. Let's start transforming it.

Let's start it with the element of the left column of the third line - the number 2. Multiply the top line by two and subtract it from the third. Thanks to this operation, both the element we need and the number 4 - the element of the second column of the third row - turned to zero.

We see that as a result of the reduction, triangular matrix. In our case, we cannot continue the transformation, since the remaining components cannot be reduced to zero.

This means that we conclude that the number of rows containing numerical values in this matrix (or its rank) is 3. The answer to the task: 3.

Task 2. Determine the number of linearly independent rows of this matrix.

We need to find strings that cannot be converted to zero by any transformation. In fact, we need to find the number of non-zero rows, or the rank of the presented matrix. To do this, let us simplify it.

We see a matrix that does not belong to the square type. It measures 3x4. Let's also start the reduction with the element of the lower left corner - the number (-1).

Its further transformations are impossible. This means that we conclude that the number of linearly independent lines in it and the answer to the task is 3.

Now reducing the matrix to a stepped form is not an impossible task for you.

Using examples of these tasks, we examined the reduction of a matrix to a triangular form and a stepped form. To make it zero required values matrix tables, in in some cases you need to use your imagination and correctly convert their columns or rows. Good luck in mathematics and in working with matrices!

,

, .

. ,

,